One of our valued clients requested Sustainable ICT to undertake research into industry trends and technological advances with respect to industrial control networks and management services. The client was particularly interested in opportunities for team consolidation, service optimisation, and software defined networking within an industrial context.

This work would inform preparation for IT and OT convergence and identification of opportunities to optimise ICT asset expenditure. Whilst full details cannot be shared, the following is a subset of this research which has been approved for sharing for the benefit of others in the industry.

As part of a review of the current operation and elicitation of a future state architecture, it is important to explore industry direction and research findings. The following outlines current trends and insights with respect to industry leaders, researchers and technology direction which will influence the future state of a converged IT and OT asset operation.

IT and OT Convergence

In 2011 Gartner first highlighted the opportunity for CIOs to make optimal use of ICT assets and management practices across both Information Technology (IT) and Operational Technology (OT) functions [1]. Evaluating convergence, alignment and integration opportunities between IT and OT was promoted as a means through which industrial enterprises could maximise the value of their investment in common services (business and technical) and exploit the value of information flows throughout the enterprise [2].

In recent years the pace at which large enterprises have undertaken such convergence has increased, with the likes of BHP Billiton, Rio Tinto, Roy Hill and Gold Fields Australia pursuing such initiatives through to 2017 [3]. These endeavours sought to make best use of operational and information technology whilst establishing a platform through which to drive business innovation [4]. The strategy is not limited to well-known Australian companies, however. The convergence of OT and IT has been evidenced globally as an imperative for creating a connected enterprise and delivering contextually meaningful information to workers regardless of location [5]. Looking to the future, research suggests that through the convergence of operations and technologies, enterprises will be better able to exploit the opportunities envisioned in Industry 4.0, such as bringing Internet of Things (IoT) sensor networks, Machine Learning (ML) and Cloud services into traditionally rigid industrial environments [6] [7].

A review of vendor and supplier information shows many internationally recognised industrial control vendors promoting the move to new ways of working and convergence. Rockwell Automation, for example, released its Connected Enterprise Execution Model, including an associated maturity model, for manufacturing and industrial operators. The model aims to help industry understand the technical and cultural changes necessary to take up the opportunities present in IoT, data analytics, scalable computing and mobility [8] [9] [10].

“We often find that less effective legacy processes are in place, and that those processes and work flows have not been designed to take advantage of the OT/IT convergence and the significant benefits available from the connected enterprise.”

Keith Nosbusch, CEO, Rockwell Automation

Such insights are particularly pertinent given the recent organisational restructuring and redundancies, and may go some way towards answering many of the questions that remain amongst the “old guard”. Moreover, when viewed through the lens of [the client’s] 2018 IT Strategy [6], the significance of successful convergence should not be underestimated. For the organisation to realise its cost reduction goals and take full advantage of market innovations such as IoT, Automation and Smart Metering (amongst many others), the successful convergence of teams, processes and technology should be considered essential.

It is widely acknowledged that the cultural and specialisation differences between IT and OT teams, and the lack of a central governing organisation for OT strategy, contribute to the formation of organisational silos [11]. Notwithstanding the need to up-skill IT resources and teams, the converged organisation needs to understand that OT is the core business of the Oil and Gas sector [11], and therefore cost is not the primary driver that it is in typical IT cost centres. Furthermore, given this fact, the mature organisation would manage the technology footprint as an asset on an equal, or near-equal, footing with plant; however, this is often not the case. In today’s OT world, failure to service, replace and manage the electronic asset risks compromising the safe operation of the physical asset.

Whilst the expected benefits of convergence are reinforced throughout the available literature, there is less information describing the opportunities with respect to operational management processes. However, global digital services company Atos highlights that harmonised IT/OT strategies, governance models and business processes, enabled through a central multi-disciplinary team, are essential components of successful IT/OT convergence [12].

Whilst industry advocates convergence to adequately position an organisation to take advantage of cost reduction opportunities, market innovation and information value [13], there is a need to consider how the underlying technologies are also evolving to become a foundation for such opportunities rather than a constraint.

Technology Convergence and Consolidation

Historically, industrial network designs have comprised a sizeable switching infrastructure footprint, rigidly defined network segments and security controls which reflect the control system function, physical location and industry legacy. In such environments it has been the responsibility of network managers to translate organisational policy into low-level network configuration commands and rules. As a result, adapting to change whilst managing the network can be challenging and error-prone [14]. Similarly, until recent years the design and deployment of server infrastructure for DCS or SCADA environments had been confined to physical server hardware, with redundant physical nodes to meet reliability and availability requirements. Overall, these aspects have limited industrial operators’ ability to adapt to changing economic conditions and constrained businesses’ ability to develop and/or maintain a competitive advantage [12].

It is important to acknowledge, however, that the nature of the technology environment in industrial operations reflects the criticality of technology to production and the real threat to personal safety in the event of loss of control. These aspects have slowed the pace of change within operational technologies compared with IT, where the consequences of failure are less extreme. In recent years, however, a number of technological improvements in IT networking and hosting have led to growing use of traditional IT solutions in the OT environment.

Virtualisation technology has become a mainstream approach to running compute workloads in data centres and is proven as a means of reducing hardware maintenance, power and cooling. Industrial control vendors have also been progressively moving towards new-generation systems which embrace both virtualisation and “software defined” technical capabilities, driven by reduced processor overheads and improved I/O performance [14]. These vendors have also recognised that virtualisation and software defined solutions can reduce production downtime and lower expenditure over the life of the asset [15].

Along with compute virtualisation, the virtualisation of the data storage layer has matured in scale and performance, and has equally gained recognition in the production control field [16] [17] [18]. There are three core types of storage connectivity: Direct Attached Storage (DAS), Network Attached Storage (NAS) and Storage Area Network (SAN). Whilst the objective here is not to elaborate on the differences between these technologies, it is important to highlight that SAN solutions carry block-level data and have evolved to use Ethernet as the underlying transport through Fibre Channel over Ethernet (FCoE) and Internet Small Computer System Interface (iSCSI). These protocols, along with advancements in object-based storage, have enabled the adoption of virtualisation technologies for storage solutions [18]. Virtual storage enables more effective addressing of storage across physical devices, which can span multiple locations, significantly increasing data security and integrity without impacting performance. By adopting a storage cluster spanning two physical sites in an active-active configuration, a single storage solution can provide automated fail-over with zero data loss and near-instantaneous recovery [19] for a significant volume of virtualised hosts.

At the network layer, advances in the virtualisation of network functions are driving considerable change across industries. The separation of network control from forwarding functions, known as software defined networking (SDN), has gained significant interest as a means of simplifying network management and facilitating network evolution. The Open Networking Foundation, a not-for-profit organisation promoting the adoption of open standards-based software defined networking, describes software defined networks as being [20]:

- Directly programmable due to its abstraction from underlying functions

- Agile through its ability to meet changing requirements

- Centrally managed through a global view of the network

- Programmatically configured to allow managers the ability to leverage automation

- Open standards-based to simplify network design and operation

The history of programmable networks goes back as far as 1995, when the Open Signalling working group initiated the first investigation into separating hardware and control software [14]. Today, the promise of SDN, particularly in support of industrial automation systems, lies in its ability to enable more effective control system distribution, quality of service, resilience and compliance, and to give a more detailed view of the network and traffic under management [21] [22]. SDN separates the functions that control how one device interacts with its neighbours (the control plane) from the functions that control how packets are parsed and forwarded and how quality of service is met (the forwarding plane). This separation gives operators improved capabilities for testing and resource management, whilst enabling the isolation of traffic flows, which brings security and operational benefits [23].
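To make the control/forwarding split concrete, the following is a minimal, hypothetical sketch in Python, not the API of any real SDN controller or of OpenFlow. A `Controller` object (control plane) installs match-action rules centrally, while a `Switch` object (forwarding plane) only looks packets up in its flow table. The class names, match fields, addresses and the "send_to_controller" miss behaviour are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Match:
    """Header fields a rule matches on (a tiny, assumed subset of real SDN match fields)."""
    src: str
    dst: str
    dst_port: int


@dataclass
class Switch:
    """Forwarding plane: looks packets up in its flow table and holds no policy itself."""
    name: str
    flow_table: dict = field(default_factory=dict)  # Match -> action string
    misses: list = field(default_factory=list)      # packets handed to the controller

    def forward(self, match):
        action = self.flow_table.get(match)
        if action is None:
            # In this sketch, a table miss is handed up to the controller.
            self.misses.append(match)
            return "send_to_controller"
        return action


class Controller:
    """Control plane: keeps a global view and programs every switch from one place."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def install(self, switch_name, match, action):
        self.switches[switch_name].flow_table[match] = action


# Hypothetical addresses: an HMI polling a PLC over Modbus/TCP (port 502).
ctrl = Controller()
edge = Switch("edge-1")
ctrl.register(edge)
hmi_to_plc = Match("10.0.1.10", "10.0.2.20", 502)
ctrl.install("edge-1", hmi_to_plc, "output:port3")
print(edge.forward(hmi_to_plc))                            # output:port3
print(edge.forward(Match("10.0.1.99", "10.0.2.20", 502)))  # send_to_controller
```

Because policy lives only in the controller, changing behaviour across many switches is a matter of calling `install` centrally rather than reconfiguring each device, which is the management benefit the text describes. It also shows why the controller becomes a critical asset: whoever can call `install` controls the network.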

SDN is not a silver bullet, however, as it comes with some challenges: it changes the security model and tooling required of the management team [20], the SDN controller represents another point of failure [24], and there is a lack of familiarity with dynamic infrastructure across the workforce [25]. Of the challenges articulated in the available literature, the threat of security attacks against the centralised SDN controller is expressed as a primary risk to SDN within industrial control [21] [22]. This reality requires consideration of evolving network security controls.

Cybersecurity for Industrial Control

Traditionally, control system vendors and OT networks have lagged behind IT in advancing cyber-security controls, since such environments have typically been physically separated and relied on specialist hardware and software [26]. The commoditisation and reliability of standardised IP-based network communications, combined with increasingly demanding and sophisticated business information requirements, have driven change in industrial control system design and capabilities.

Multi-tiered security solutions, such as perimeter firewalls, access control lists (ACLs) and network zoning (to name a few), were the mainstay controls of industrial control network designs. Today, however, contemporary industrial control system designs are evolving towards a more pervasive security approach, enabling capabilities such as policy-based control with dynamic response and reconfiguration [21]. Such capabilities are arising through the advent of software defined networking, and the potential for a significant reduction in business security risk in control systems is recognised throughout the literature reviewed.

Where traditional networks are made up of a complex mesh of physical appliances, rules and permissions which are manually implemented and maintained [14], industry research on Software Defined Networking proposes multi-level protection strategies and a reduction in physical appliances through centralisation, application flow whitelisting, inbuilt anomaly detection and human-assisted automation for security monitoring and supervision [21]. Application flow whitelisting is a noteworthy inclusion because of its similarity to the Application Whitelisting referred to in ASD guidelines and IEC-62443. In the context of contemporary control network design, flow whitelisting is the definition of allowable “streams” between end-points and/or networks. This presents several management and resource advantages over current designs which rely on numerous ACLs, deep packet inspection rules or host intrusion protection agents. Because it defines and monitors application flows, the SDN capability can also handle proprietary protocol payloads and does not require host-level intervention [22].
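A flow whitelist can be pictured as a short list of allowed streams with a default-deny rule for everything else. The sketch below is a hypothetical illustration using Python's standard `ipaddress` module; it is not how any particular SDN product stores or enforces flows, and every network, protocol and port in `WHITELIST` is an assumption.

```python
import ipaddress
from typing import NamedTuple


class FlowRule(NamedTuple):
    """One allowed "stream": source network -> destination network on a protocol/port."""
    src_net: str
    dst_net: str
    protocol: str
    dst_port: int


# Hypothetical whitelist for illustration; every network and port is an assumption.
WHITELIST = [
    FlowRule("10.0.1.0/24", "10.0.2.0/24", "tcp", 502),  # HMI subnet -> PLC subnet (Modbus/TCP)
    FlowRule("10.0.3.5/32", "10.0.2.0/24", "tcp", 502),  # one historian host polling the PLCs
]


def is_allowed(src, dst, protocol, dst_port, rules=WHITELIST):
    """Default deny: a flow passes only if it matches an explicitly defined rule."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    return any(
        src_ip in ipaddress.ip_network(r.src_net)
        and dst_ip in ipaddress.ip_network(r.dst_net)
        and protocol == r.protocol
        and dst_port == r.dst_port
        for r in rules
    )


print(is_allowed("10.0.1.10", "10.0.2.20", "tcp", 502))  # True: matches the HMI -> PLC rule
print(is_allowed("10.0.1.10", "10.0.2.20", "tcp", 23))   # False: Telnet was never whitelisted
```

The design point is that the allowed streams are declared once, in one place, and anything not declared is refused, rather than being expressed as many scattered per-device ACL entries.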

Traditional network security design approaches such as Defence-in-Depth, promoted in the ASD information security principles [27], and industry security standards such as ISA-99 or its successor IEC-62443 are still very much relevant. However, industry research and global trends point to virtualisation across the compute, storage and network domains as a better foundation for reducing the complexity and cost of delivering, maintaining and supporting such secure networks. From a security standpoint, combining these established practices with the new capabilities makes it feasible to secure the centralised controller, identified as a primary risk to SDN, to the point where the benefits of SDN and convergence appear to outweigh the drawbacks.

Conclusion

The body of work surrounding IT/OT convergence, virtualisation and software defined networks suggests considerable advantages for organisations with the courage to undertake such an endeavour. Technological and cultural changes are required to seize the commercial opportunities present in IoT, data analytics and mobility whilst also delivering simplified management, automation and improved security control. It is clear that organisations seeking to converge IT and OT organisational functions without also addressing the technological underpinnings are likely to realise minimal management and cost savings.

There is no denying that organisations engaged in such convergence will face considerable challenges during the transition. Strong organisational change management will be necessary to navigate the initial team changes and beyond, as team members may struggle to accept changes to team dynamics and adjusted boundaries of responsibility. Stalwarts of production control separation will continue to push back on such changes and challenge the maturity of IT solutions, which in most cases can meet or exceed OT requirements. Organisations will require strong leadership to challenge the status quo and overcome fear-based opposition from traditionalists struggling to retain relevance in a new converged world.

Technologically, vendors and suppliers across most domains are embracing convergence through “software defined” capabilities and virtualisation to reduce management overheads and optimise for change. IT networking and virtualisation companies recognise the opportunity in industrial control markets and have increased their focus on real-time performance, security and reliability. All research reviewed suggests that the primary challenges for organisations seeking convergence will lie in cultural and personal domains, not technological ones. Meeting them requires strong leadership commitment and a transformation structure with the authority to overcome individual opposition and deliver business outcomes.

References

[1] Gartner Inc., “IT and Operational Technology: Convergence, Alignment and Integration,” Gartner Inc., 2011.

[2] CIO.com, “CIOs key to bridging the IT/OT divide,” 1 June 2017. [Online]. Available: https://www.cio.com.au/article/620112/cios-key-bridging-it-ot-divide/.

[3] R. Crozier, “Roy Hill to back IT-OT integration,” ITNews, Mar 2017. [Online]. Available: https://www.itnews.com.au/news/roy-hill-to-back-it-ot-integration-456400. [Accessed Feb 2019].

[4] R. Crozier, “BHP Billiton to bring IT-OT to east coast mines,” ITNews, Jan 2017. [Online]. Available: https://www.itnews.com.au/news/bhp-billiton-to-bring-it-ot-to-east-coast-mines-447788. [Accessed Feb 2019].

[5] S. Wekare, “IT and OT Convergence: The case of implementing the connected enterprise,” University of Dublin, 2016.

[6] [client author], “IT Strategy,” [the client], 2018.

[7] B. Marr, “What is Industry 4.0? Here’s A Super Easy Explanation For Anyone,” Forbes.com, Sept 2018. [Online]. Available: https://www.forbes.com/sites/bernardmarr/2018/09/02/what-is-industry-4-0-heres-a-super-easy-explanation-for-anyone/#a1b26319788a. [Accessed Feb 2019].

[8] Rockwell Automation, “The Connected Enterprise – Bringing people, processes and technology together,” Rockwell Automation.

[9] Rockwell Automation, “The Connected Enterprise Maturity Model,” Rockwell Automation.

[10] Meet the boss TV, “Standardized and Connected – Manufacturing Success,” Meet the boss TV in association with Rockwell Automation.

[11] R. Kranendonk, “The Convergence and Integration of Operational Technology and Information Technology Systems,” Delft University of Technology, Delft, Netherlands.

[12] ATOS, “The convergence of IT and Operational Technology,” ATOS, 2012.

[13] OSIsoft, “Challenges, Opportunities and Strategies for Integrating OT and IT with the Modern PI System,” OSIsoft LLC, San Leandro, CA, 2015.

[14] B. A. A. Nunes, “A Survey of Software-Defined Networking: Past, Present, and Future of Programmable Networks,” IEEE Communications Society, 2014.

[15] H. Forbes, “Virtualization and Industrial Control,” ARC Advisory Group, Jan 2018. [Online]. Available: https://www.arcweb.com/blog/virtualization-industrial-control. [Accessed Feb 2019].

[16] J. Kempf, “Best Practices for Virtualization in Process Automation,” Process-worldwide.com, 2014. [Online]. Available: https://www.process-worldwide.com/best-practices-for-virtualization-in-process-automation-a-426557/. [Accessed Feb 2019].

[17] Wind River, “Requirements for Virtualization in Next-Generation Industrial Control Systems,” Mar 2018. [Online]. Available: https://storyscape.industryweek.com/wp-content/uploads/2018/03/requirements-virtualization-next-gen-industrial-cs-white-paper.pdf. [Accessed Feb 2019].

[18] M. Salmenperä, “Virtualisation Applicability to Industrial Automation,” Jan 2019. [Online]. Available: https://dspace.cc.tut.fi/dpub/bitstream/handle/123456789/23723/Lappalainen.pdf?sequence=1. [Accessed Feb 2019].

[19] M. Ištok, “VMWare vSAN: Your Modern Storage,” [Online]. Available: https://www.delltechnologies.com/content/dam/delltechnologies/images/forum/emea/presentations/sk-sk/itt-09.pdf. [Accessed Feb 2019].

[20] Centre for Cyber and Information Security, Norwegian University of Science and Technology, “Prospects of Software-Defined Networking in Industrial Operations,” International Journal on Advances in Security, pp. 101-110, 2016.

[21] H. Sandor, “Software Defined Response and Network Reconfiguration for Industrial Control Systems,” in Critical Infrastructure Protection XI: 11th IFIP AICT, 2017.

[22] A. Aydeger, “Software Defined Networking for Smart Grid Communications,” Florida International University, 2016.

[23] G. Kalman, “Prospects of Software-Defined Networking in Industrial Operations,” International Journal on Advances in Security, vol. 9, pp. 101-111, 2016.

[24] R. Pujar, “Path protection and Failover strategies in SDN networks,” in Open Networking Summit, Santa Clara, CA, 2016.

[25] D. Geer, “Five reasons IT pros are not ready for SDN investment,” TechTarget, Oct 2014. [Online]. Available: https://searchnetworking.techtarget.com/feature/Five-reasons-IT-pros-are-not-ready-for-SDN-investment. [Accessed Feb 2019].

[26] R. R. R. Barbosa, “Flow Whitelisting in SCADA Networks,” Aalborg University, Denmark.

[27] Department of Defence, “Australian Government Information Security Manual – Principles,” Australian Government, 2016.

[28] Network Computing, “SDN: Time to Move On, Gartner Says,” 3 Oct 2017. [Online]. Available: https://www.networkcomputing.com/networking/sdn-time-move-gartner-says.

[29] R. Chua, “From SDN-Washing to SDN-Hiding,” 18 Nov 2018. [Online]. Available: https://www.sdxcentral.com/articles/analysis/from-sdn-washing-to-sdn-hiding/2018/11/.

[30] N. Regola, “Recommendations for Virtualization Technologies in High Performance Computing,” in 2nd IEEE International Conference on Cloud Computing Technology and Science, 2010.

[31] K. Steenstrup, “2016 Strategic Roadmap for IT/OT Alignment,” Gartner Inc, 2015.

[32] Honeywell International, “Virtualization support for process control systems,” Control Engineering, 7 Sept 2011. [Online]. Available: https://www.controleng.com/articles/virtualization-support-for-process-control-systems/. [Accessed Feb 2019].

[33] P. Hubbard, “The case against SDN implementation,” TechTarget, Aug 2015. [Online]. Available: https://searchnetworking.techtarget.com/opinion/The-case-against-SDN-implementation. [Accessed Feb 2019].

[34] K. Ahmed, “Software Defined Networks in Industrial Automation,” 2018.

[35] M. Sainz, “Software Defined Networking opportunities for intelligent security enhancement of Industrial Control Systems,” Jan 2018. [Online]. Available: https://www.researchgate.net/publication/319251133_Software_Defined_Networking_Opportunities_for_Intelligent_Security_Enhancement_of_Industrial_Control_Systems. [Accessed Feb 2019].

What does it take to implement, and what do I need to consider from a development and management perspective?

With today’s AI hype, one could presume that developing Artificial Intelligence or Machine Learning is as easy as developing a website.

Salesforce.com has released Einstein, developed through acquisition, and is positioning its offering within the context of CRM data. However, Einstein can currently address only certain problems, such as image recognition and customer service “bots”. It cannot easily be adapted to create a whole new model, for example one that evaluates the validity of an insurance claim submitted via Salesforce Communities; that requires sophisticated development. I’m sure Salesforce’s research teams will be working hard to address this.

Google Cloud Platform, Amazon AWS and Microsoft Azure all offer Machine Learning compute services, which are all but essential given the GPU and memory requirements of AI and ML. Again, however, this relies on your business having the capacity to choose and develop the appropriate Machine Learning or Deep Learning models and then release them to these environments. This highlights a further point: if your organisation hasn’t adopted a cloud compute strategy, you will likely be at a disadvantage when it comes to AI and ML development.

Frameworks have taken on a lot of the heavy lifting. TensorFlow, Keras, Caffe, Torch et al. are all a “god-send” for development, and each has its strengths. But they are just frameworks: they abstract away the complexity of the underlying mathematics that powers AI and ML (matrix mathematics and back-propagation, for example), yet they still require considerable data processing and coding.

So it is unlikely that any of the aforementioned vendors will soon release a simple and intuitive solution for general Deep Learning and Machine Learning development. It is therefore best to understand how to approach this within our organisations.

There are four important aspects that need careful planning and execution. Failing to give them that care will lead either to failure to deliver the solution or, worse still, to a solution that over time fails to meet the expected accuracy, which could be disastrous in some scenarios. Here are the areas:

A. Understand your “why”

Foremost in the process is being very clear about the Question we are seeking to answer with our Machine Learning or Deep Learning implementation. The right question will drive the selection of the data, algorithm and framework best suited to your environment.

B. Understand your Data Sources

In seeking to answer our question, identifying data sources can be a lengthy process. It requires identifying our source data, shaping the data to suit our model type, and validating it. If you’re interested in learning more about shaping data for this purpose, a good starting point is Hadley Wickham’s paper “Tidy Data”, which a colleague of mine put me onto.
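As one small example of shaping data, a common tidying step is "melting" a wide table (one column per period) into long form, where each row is a single observation. The sketch below is a minimal pure-Python version under the assumption that rows arrive as dictionaries; the `melt` helper and the site/month data are hypothetical. In pandas, the equivalent built-in is `pandas.melt`.

```python
def melt(rows, id_col, var_name="variable", value_name="value"):
    """Convert wide rows (dicts) into long rows: one row per (id, variable) pair."""
    long_rows = []
    for row in rows:
        for key, value in row.items():
            if key == id_col:
                continue  # the identifier is repeated on every long row instead
            long_rows.append({id_col: row[id_col], var_name: key, value_name: value})
    return long_rows


# Hypothetical wide table: one column per month.
wide = [
    {"site": "A", "jan": 10, "feb": 12},
    {"site": "B", "jan": 7, "feb": 9},
]
tidy = melt(wide, id_col="site", var_name="month", value_name="output")
# tidy[0] == {"site": "A", "month": "jan", "output": 10}
```

In long form, each row can be filtered, grouped or fed to a model directly, which is why this shape is usually a better starting point for feature engineering than a report-style wide table.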

Companies that already have a mature data pipeline and BI environment will be part of the way there. Data pre-processing is a skill and requires a good toolkit. In future, I predict you will see companies such as Zoomdata building real-time data pipelines specifically to support Deep Learning implementations. Watch this space.

C. Correct Algorithm Selection

Not all algorithms are created equal, and there is a plethora of algorithms suited to different problem domains. This is where a sizable amount of time must be invested. This step determines whether a Deep Learning approach (neural networks whose layers use activation functions such as ReLU or Softmax) or a classical Machine Learning algorithm (such as KNN, SVM or K-Means clustering) is appropriate; each has its place. Choose the wrong one and you’ll have sub-optimal results.
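ReLU and Softmax are activation functions applied inside the layers of a neural network rather than standalone learning algorithms. The minimal Python sketch below shows what each one computes; ReLU is commonly used in hidden layers and Softmax in the output layer of a classifier. The input values are arbitrary examples.

```python
import math


def relu(xs):
    """ReLU activation: max(0, x) applied element-wise."""
    return [max(0.0, x) for x in xs]


def softmax(xs):
    """Softmax: turns raw scores into probabilities that sum to 1."""
    m = max(xs)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


hidden = relu([-1.5, 0.0, 2.0])   # [0.0, 0.0, 2.0]
probs = softmax([2.0, 1.0, 0.1])  # three class probabilities, largest for the first score
```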

D. Integrating into existing methods

As with many scientific endeavours, Deep Learning and Machine Learning require a combination of Science, Experience-based Intuition and Art. Estimating the time needed to develop models can be difficult, depending on the type of Question being asked (see above) and the experience of the team. On this basis, the work is well suited to the rapid test-and-release cycles prescribed by Agile methods.

A method for implementation

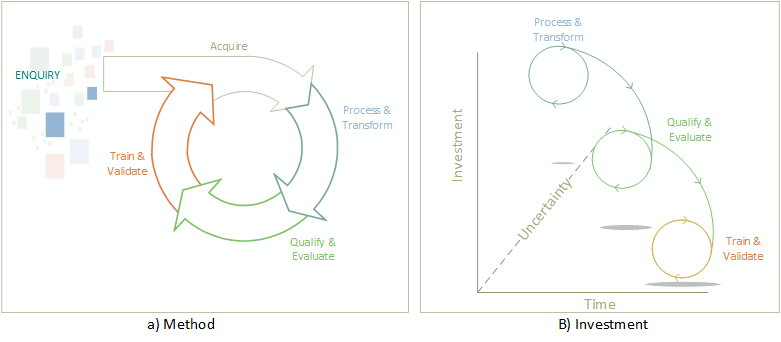

With these aspects of Deep Learning or Machine Learning adoption in mind, applying them in an Agile method requires four key steps (Figure 1.a).

The method is triggered by an area of enquiry (our “why”), based on a particular business problem and the available data.

Acquire Stage: The formal identification of data sources, both public and private, that can be used in the implementation. Most implementations need a significant amount of data to Train, Test and Validate the model, so it will be necessary to define a sourcing strategy for both the initial development and incremental training once the model is complete.
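Keeping the Train, Test and Validate data separate is what makes later accuracy figures trustworthy. The sketch below is a minimal, hypothetical splitter in Python; the 70/15/15 proportions and the fixed seed are assumptions for illustration, not a recommendation for every problem (time-series data, for instance, usually should not be shuffled).

```python
import random


def split_dataset(records, train=0.7, validate=0.15, seed=42):
    """Shuffle and split records into train, validation and test sets.

    Whatever remains after the train and validation shares becomes the test set.
    """
    if not 0 < train + validate < 1:
        raise ValueError("train + validate must be between 0 and 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validate)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


train_set, val_set, test_set = split_dataset(range(100))
# len(train_set), len(val_set), len(test_set) -> 70, 15, 15
```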

Process & Transform Stage: Entails pre-processing the data sources and shaping the data. This stage identifies the features required to train our model and normalises their values. Its output is essential for evaluating our approach.
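Normalisation can take several forms; min-max scaling to the range [0, 1] is one common choice and is assumed here purely as an example. The sketch below shows the calculation on a hypothetical feature.

```python
def min_max_normalise(values):
    """Rescale a numeric feature to [0, 1] using (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no information; map it to 0.0 rather than divide by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical feature, e.g. pump pressure readings in kPa.
pressures = [200.0, 250.0, 300.0]
scaled = min_max_normalise(pressures)  # [0.0, 0.5, 1.0]
```

In practice the minimum and maximum should be taken from the training set only and reused when scaling validation, test and production data, so that information from held-out data does not leak into training.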

Qualify & Evaluate: This stage requires that we evaluate our input data, remove outliers that would impact our accuracy, and determine which algorithm or algorithms best answer our question. Visualising our data is essential. Note that multiple algorithms are often used, depending on the solution; for example, a Deep Learning model uses different algorithms in different layers. A team may spend a large amount of time evaluating different algorithms, so patience is required.
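There are many ways to identify outliers; the interquartile-range rule (Tukey's fences) is one simple, widely used option and is assumed here for illustration. The sketch uses Python's standard `statistics.quantiles` (Python 3.8+) on hypothetical sensor readings.

```python
import statistics


def remove_outliers_iqr(values, k=1.5):
    """Keep only values inside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lower <= v <= upper]


# Hypothetical sensor readings with one obvious spike.
readings = [10, 11, 12, 12, 13, 14, 95]
cleaned = remove_outliers_iqr(readings)  # 95 is dropped
```

Whether an extreme value is noise or a genuine event worth modelling is a domain question, which is one reason visualising the data before discarding anything matters.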

Train & Validate: In this stage the model is developed using the chosen approach and our separated datasets, and the accuracy of its predictions is evaluated. We choose our hyper-parameters and adjust them based on training results. Training a model can take time and often requires significant intuition, as it is currently a largely manual undertaking, though the actual coding effort is generally not large for an experienced practitioner.
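To illustrate choosing a hyper-parameter against a validation set, the sketch below uses a from-scratch k-nearest-neighbours (KNN) classifier, one of the algorithms named earlier, and picks the number of neighbours `k` that scores best on held-out validation data. The dataset, the candidate values of `k` and the helper names are hypothetical; a real project would typically use a library implementation.

```python
import math
from collections import Counter


def knn_predict(train, point, k):
    """Classify `point` by majority vote among its k nearest training examples.

    `train` is a list of (features, label) pairs; distance is Euclidean.
    """
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of (features, label) pairs in `data` that are predicted correctly."""
    correct = sum(knn_predict(train, x, k) == y for x, y in data)
    return correct / len(data)


def choose_k(train, validation, candidates=(1, 3, 5)):
    """Pick the k with the best accuracy on the held-out validation set."""
    return max(candidates, key=lambda k: accuracy(train, validation, k))


# Tiny hypothetical dataset: two well-separated clusters labelled "a" and "b".
train = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
         ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
validation = [((0.5, 0.5), "a"), ((5.5, 5.5), "b")]
best_k = choose_k(train, validation)
```

The separate test set is deliberately not used here; it is held back so that one final, unbiased accuracy estimate can be made after the hyper-parameters are fixed.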

The investment required in execution leans heavily towards the design stage (Figure 1.b above). As we iterate through the phases we reduce our uncertainty, and our pace of execution increases. Once we have a production solution, the pipeline to retrain the model is already built and should therefore be largely automated. Yes, I did say retrain the model: as you gather more data, you may need to retrain to improve prediction accuracy, depending on the type of algorithms used.

It’s an exciting endeavour, and one that can drive enormous benefits if you appreciate the steps to get there. It is still an emerging field, and few toolsets make development simple, but with the right team you can get the results.

Originally published by the Enterprise Architecture Leadership Council @ Corporate Executive Board – Jan. 2014

There are many quotes that could be used as an analogy for EA; the ubiquitous “fail to plan, plan to fail” comes to mind almost immediately. However, another quote that springs to mind is one from Mike Tyson in 1987: “Everybody has a plan until they get punched in the face.”

Mike Tyson was referring to the cold hard reality that hits you when you’re standing squarely in the ring. You’ve finished preparing for the fight and all that’s left to do is see it through to the bitter end. The power in Mike Tyson’s punches was so great that an opponent would be lucky to be standing, let alone remembering his fight plan, after just one hit.

For a boxer, the fight plan is based on certain conditions, expectations, and parameters, as well as a presumption of his/her preparedness for the fight. The plan is formulated through close engagement with a number of important specialist team members—a physiotherapist, nutritionist, psychologist, maybe a performance analyst, and most importantly, the coach. Collectively these specialists could be considered the strategists of the boxing ring, with expertise in delivering the tools and knowledge for their boxer to prevail, round after round.

With that strategy in place, the boxer can then be considered the tactician: responsible for understanding and committing to the plan, so that he/she keeps the objective in mind and has the greatest opportunity for success. But once the boxer is in the ring, the tactics to reach the end goal will ultimately need to be adjusted to changing conditions in real time.

However, that doesn’t mean the boxer is left to his or her own devices during the fight. After each round, there is time for the coach and team to consult and advise the boxer, and for the boxer to get advice from the team on his/her condition and their observations of the opponent. Smelling salts may be provided to sharpen the senses, petroleum jelly applied to stop bleeding, and wrists may be re-strapped where the boxer is experiencing discomfort. This consultation occurs round after round so that strategy revisions can be decided along the way, based on the expected point count.

Mike Tyson’s famous quote and the way a boxing team approaches a fight are particularly relevant when we consider the disciplines of Enterprise Architecture (Architecture Planning) and Solution Architecture (Architecture Delivery) and how they should work together. Enterprise Architects are the strategists, the team of specialists that formulate the plan, while the Solution Architect is the tactician, the individual who must use his/her available resources to execute the plan and realize the desired objective. Of course, there are areas of specialization in each of these disciplines; however, the nature of the relationship that should exist between Architecture Planning and Architecture Delivery is still the same.

As with the boxing team in my analogy, there are many types of strategists that we refer to as Enterprise Architects. Each brings a particular background that an organization needs at a point in time. They are typically highly experienced and keep in touch with industry directions in their domain of expertise. In the same way, Solution Architects, our tacticians, have a specific skillset. They interpret the plan, ask questions, focus on areas of uncertainty and risk that need mitigation, raise issues with strategists, and use all the resources made available to them to execute the strategy successfully.

Drawing further parallels between our boxing team analogy and these architecture disciplines, the strategists of the boxing team are not locked in offices, engaging almost exclusively with senior executives, drawing pictures of each round and recommending punch combinations to the boxer based on previously observed knock-outs. They don’t just show themselves on rare occasions before and during the fight to answer questions with an air of righteousness. No, as the analogy suggests, the strategists are committed to the boxer as much as the boxer is committed to the plan. They ensure that the boxer has everything he or she needs before, during, and after the fight, and if need be, the strategist may have to help stop a fight by “throwing in the towel,” because the strategist is as deeply committed as the person throwing the punches.

But let’s be honest, there aren’t such close relationships between Enterprise Architecture and Solution Architecture disciplines in enterprises today. So how does such a chasm develop between Enterprise Architecture and Solution Architecture?

Firstly, it’s important to highlight that I don’t believe the current situation is a failure of individual professionals. In the rush to adopt Enterprise Architecture frameworks as a means to improve Architecture Planning, many organizations have failed to take stock of what is required to execute the plan, or in other words, what the Architecture Delivery disciplines require to succeed. This has led many Enterprise Architects to adopt a distinctly hands-off approach, without any responsibility or accountability for realizing the plan.

Observation across a number of enterprises points to many factors that have contributed to this situation. Here are a few:

- Lack of organizational ability to quantifiably define and measure EA Practice performance

- Failure to appropriately adapt a proven Enterprise Architecture Framework to the organization

- Adoption of frameworks that do not sufficiently address the interfaces with Architecture Delivery

- Inexperience of practitioners in architecture delivery

- EA practitioners who have spent too much time abstracted from the reality of architecture delivery.

As Enterprise Architects, and leaders of Enterprise Architecture practices of varying maturity, perhaps we need to take stock of, and measure our worth against, the strategists of the boxing team in our analogy. Here are a few areas to reflect upon.

Teaming

- When was the last time you sat beside an Architecture Delivery project team for an extended period of time and actually mopped up the proverbial blood with Solution Architects?

It is only through spending time embedded in Architecture Delivery roles and activities that we can maintain relevance to a field of experienced professionals who have dedicated their careers to delivering unquestionably measurable outcomes. It is a mistake to think that, as Enterprise Architects, we are more experienced and knowledgeable than Solution Architects, because the disciplines require different types of experience.

- Have you established the correct team composition for your Enterprise Architecture practice? Have you covered the bases necessary for your Architecture Plan?

Diversity of experience and specialization is important, but so are high levels of emotional intelligence. A team lacking the ability to develop personal relationships and read the non-verbal cues of stakeholders and project delivery teams is limited in its ability to identify and address issues early. It should no longer be acceptable to abdicate our responsibility for seeing the plan realized for the enterprise, and close working relationships are critical to doing so. If the team composition is not right to realize the plan, it needs changing.

Resources

- What physical architecture artefacts (models, patterns, standards, etc.) have you published in a way that can be effectively reused by the Solution Architect?

Heading into 2014, it is surprising to find many large organizations that have failed to establish a formal, structured repository for architecture storage, management, interrogation, and retention. An architecture that cannot be reused is ultimately devalued.

- What tooling have you made available for the management, discovery, and reuse of architectures?

The continued exclusive use of Microsoft Visio or, worse still, a variety of non-integrated tools depending on the type of architecture being developed, will continue to degrade the value of enterprise architectures. Do boxers keep using the boxing gloves and training methods of days gone by, or do they adopt proven new glove technology and training approaches to maximize their opportunity for success?

- Have you formalized an Architecture Delivery framework and/or standard?

Regardless of how implementation is undertaken, without a defined process and templates for Architecture Delivery that ensure completeness, consistency, and domain coverage, Solution Architecture will be less efficient and may lack demonstrable alignment to the Architecture Plan. Just as there are Architecture Planning frameworks such as TOGAF and FEAF, there are Architecture Delivery frameworks such as DoDAF, and methods and standards such as the 4+1 view model and ISO/IEC 42010, that provide guidance and rules for defining, structuring, classifying, and organizing architectures.

Processes

- What processes have you implemented as a formal method for incorporating change into published strategies, standards, and architecture models based on Solution Architecture feedback?

This is more than communities of practice and weekly conference calls, where there is often too much talk and not enough action. Structured and actionable methods of change initiation, review, and approval need to be implemented and, of course, measured. Otherwise, architectures become stagnant.

- What processes or practices have you implemented to identify, rapidly respond to, and support Solution Architects when risks and issues are raised?

How often do you review a project’s risk and issues log, call the Solution Architect, and enquire about delivery progress? The difference lies in how active and engaged we are in the realization of the Architecture Plan. Personal engagement and commitment to the success of a program or project build an Enterprise Architect’s credibility.

There is no doubt there are many areas in which Architecture Delivery practitioners can also improve. This discipline warrants a deeper assessment of its own. Nevertheless, as practitioners of Enterprise Architecture we need to do more than can be reasonably defined within an EA Framework.

If we are, arguably, viewed as senior representatives of ICT Architecture in an enterprise, then we must look beyond abstracted role definitions and instead critique our purpose and performance in terms of the support we provide to Architecture Delivery practitioners in the achievement of tangible business solutions, outcomes, and benefits. For ultimately, what good is the perfect plan if we’ve not considered what will be required to achieve success once our fighter is in the ring?

ISO 31000 defines risk as the “effect of uncertainty on objectives.”

This definition is particularly appropriate within the context of defining architectures. I’d go so far as to assert that reducing uncertainty is one of the fundamental axioms of architecture, and therefore of the role of an architect. Some simple examples follow:

- A Solution Architecture will reduce the business risk relating to a solution’s ability to meet particular requirements (objectives). These requirements, typically defined across business, functional, and non-functional aspects of a solution, collectively deliver an organisational outcome. The architecture will also reduce uncertainty regarding business interfaces, deployment, and the necessary support structures.

- A Technology Strategy, and its accompanying road-map, reduces uncertainty about the technology investment required for the ICT capabilities that support business objectives over a prescribed planning horizon. Such planning therefore reduces the organisational risk of ill-directed ICT investment.

- A Segment Architecture for Integrated Customer Service will reduce executive uncertainty regarding the level of ICT systems and organisational change required to support a new customer service division. The endorsed segment architecture will improve the organisation’s chances of success before it ventures into a change program, and therefore reduce the risk of abject failure.

It is therefore reasonable to assert that, by minimising uncertainty about an architecture’s ability to meet business objectives, the Architecture Practitioner is fundamentally managing and reducing risk.

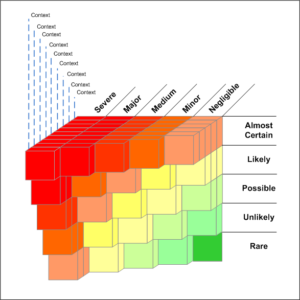

If you accept this axiom as true, you can begin to consider how risk management principles might be applied to the way architectures are created. By adopting a risk-driven approach, an Architecture practitioner can address organisational concerns by focusing on the components of the solution prioritised through risk assessment criteria.

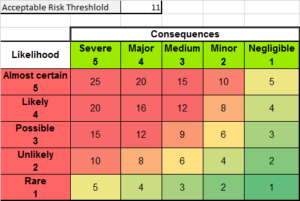

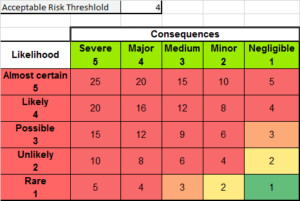

Every organisation will have its own risk appetite: the level of risk it is prepared to carry through different types of engagements. The lower the risk appetite, the more complete the architecture needs to be, and the inverse also applies. Figure 1 shows a simple risk matrix with analysis axes by way of example. Where the likelihood is low and the impact negligible, only light-touch analysis and design would be required. However, if a component of the architecture has a major impact and is likely to occur, considerably more analysis and design will be required.

To build on this concept, an “Acceptable Risk Threshold” would represent an organisation’s risk appetite and would be used to gauge the level of analysis necessary within the architecture scope. In Figure 1 the risk appetite is quite balanced: only components of an architecture that are likely to almost certain to occur, and that have a major to severe impact, require the most analysis. Figures 2 and 3 show this graphically for organisations with different Acceptable Risk Thresholds. Figure 2 represents an organisation with a low risk threshold (4). Figure 3 sits in the middle range with a higher risk threshold (11), somewhat reflective of the risk appetite in Figure 1.
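To make the threshold idea more concrete, the following is a minimal sketch of how architecture components might be ranked and assigned an analysis depth. It assumes a 5x5 matrix where likelihood and impact are each scored 1 to 5 and multiplied; the scale labels, the product scoring rule, the two analysis depths, and the names `risk_score` and `plan_analysis` are all hypothetical and may not reproduce the exact cell values in Figures 1 to 3.

```python
from typing import Dict, List, Tuple

# Assumed ordinal scales for a 5x5 risk matrix (hypothetical labels and values).
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
IMPACT = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}


def risk_score(likelihood: str, impact: str) -> int:
    """Score a component as likelihood x impact, giving a value from 1 to 25."""
    return LIKELIHOOD[likelihood] * IMPACT[impact]


def plan_analysis(
    components: Dict[str, Tuple[str, str]], threshold: int
) -> List[Tuple[str, int, str]]:
    """Rank components by risk and flag those above the Acceptable Risk Threshold.

    Components scoring above the threshold exceed the organisation's risk
    appetite and get detailed analysis; the rest get light-touch treatment.
    """
    plan = []
    for name, (likelihood, impact) in components.items():
        score = risk_score(likelihood, impact)
        depth = "detailed" if score > threshold else "light-touch"
        plan.append((name, score, depth))
    # Highest-risk components first, so analysis effort is prioritised by risk.
    plan.sort(key=lambda item: item[1], reverse=True)
    return plan


if __name__ == "__main__":
    # Hypothetical solution components with assessed (likelihood, impact).
    components = {
        "billing integration": ("likely", "major"),
        "reporting module": ("possible", "moderate"),
        "user preferences": ("rare", "negligible"),
    }
    # Compare the low (4) and mid-range (11) thresholds described above.
    for threshold in (4, 11):
        print(threshold, plan_analysis(components, threshold))
```

Under these assumptions, the hypothetical reporting module (score 9) warrants detailed analysis for the organisation with a threshold of 4, but only light-touch analysis at a threshold of 11, while the billing integration (score 16) needs detailed analysis in both cases.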

Such an approach is a considerable departure from standard practice. Supporting it would require an organisation to be quite structured in its architecture practice, and to invest additional upfront planning and analysis in assessing the risk of the components that make up the architecture. Standardised views and viewpoints, modelling notation, and analysis methods would need to be in place, and the risk threshold could then be applied to the development of Statements of Architecture Work. Governance bodies would perhaps have a better means through which to assess completion of the architecture, rather than a mere “tick-n-flick” that a document has been completed.

Undertaking architecture will inevitably reduce implementation risk, but through the adoption of a Risk Driven Design approach, an architecture will also be more likely to address the organisation’s needs by focusing effort on the areas that represent the greatest risk, rather than producing overly burdensome architectural deliverables. Admittedly, the concept requires further development to prove it within a working environment; however, the basis behind the approach would seem to warrant further exploration.

By way of example, some time ago I was asked to assist two large programs of work delivering distinctly separate line-of-business applications. Each program was a multi-year, multi-million investment committed by the enterprise. Whilst the business case and initial scope of work for each program had identified bi-directional systems integration as a significant enterprise benefit, a lack of strong stakeholder support resulted in both programs de-scoping integration due to schedule and budgetary pressures.

With the scope adjusted, the architects for the solutions did not consider integration concerns within the architecture. In fact, integration received so little consideration that it later emerged that one of the platforms had significant limitations in the level of integration it could support. Significant business benefits were at risk of not being realised.

This is just one example of program governance decisions made during delivery directly impacting the longer-term enterprise architecture vision. Whilst I won’t go into the detail of the activities required to take corrective action for the programs in my example, or the political turmoil that ensued, it brings to light shortfalls in the governance frameworks adopted in such organisations.

The difference between Architecture Governance and Program Governance in large programs of work is a topic for a separate discussion. In this post, I’m more interested in exploring what I believe individual practitioners can do to mitigate deficiencies in governance decision-making.

As individual architecture practitioners, it is unrealistic to think we can always influence governance decision-making to favour architectural outcomes. You win some and you lose some. However, we can adopt the following practices as mechanisms to protect architectural integrity in the interests of the enterprise vision:

“Bottom-up and Top-down” awareness

First learn the meaning of what you say, and then speak – Epictetus

Today more than ever, it is essential to remind ourselves that as Architecture practitioners we all play a part in delivering an enterprise architecture, regardless of role and position description. Practitioners therefore need to be conversant with, and supportive of, each architecture discipline and its purpose within an enterprise. This is necessary as markets become more dynamic and enterprises seek to become more responsive or ‘agile’.

Program Architects and Solution Architects need to be conversant in enterprise architecture parlance, the framework adopted within the enterprise, and the published Enterprise Architecture artifacts such as road-maps, capability maps, and motivation models. Armed with this knowledge, the Architect will be better prepared to challenge Program/Project scope changes that could impact the integrity of the enterprise architecture itself.

On the flip side, Enterprise Architects should be well informed of the business, technical, and governance challenges that will exist in the delivery of solutions scoped as part of the Enterprise Architecture planning process. Through the formulation of the roadmap and the scoping of the required program(s) of work, the Enterprise Architect, or nominated Program Architect, must ensure that dependencies are identified and risks associated with delivery are acknowledged early. This not only gives the Solution Architect a more considered foundation to begin with, but also ensures that the downstream impacts of the program delivery structure have been considered. Deficiencies in these knowledge domains, and a lack of upfront investment in program-of-work planning, especially in the business and technical dimensions, will limit the Enterprise Architect’s ability to advise and support architecture practitioners when they are confronted with problematic governance decisions.

Architecture Scope IS NOT Program/Project Scope

Whether a practitioner is engaged as an Enterprise Architect, Program Architect, or Solution Architect, his or her work is typically bound within the scope of a particular planned initiative. However, the initiative scope should not be the sole basis for the scope of architecture analysis. This assertion, I believe, applies to all architecture disciplines.

The program/project scope is typically defined along Schedule, Cost, and Quality dimensions (I’ve simplified here in the interests of brevity). Each of these aspects is important for managing delivery of the end solution; however, I have yet to see a program/project management plan or statement of work that includes “realising enterprise architecture outcomes” as a project objective. This is why the Architecture practitioner is typically responsible for ensuring the ongoing alignment of the solution with the enterprise architecture.

Consider an Enterprise Architect required to define a Segment Architecture for a new Service Management business unit (example figure below). The unit offers business services consumed by external stakeholder groups (customers) and interacts with a number of internal services to deliver them.

The definition of this architecture would be undertaken within a defined engagement scope, or conceivably encapsulated within a broader strategic initiative. However, should the desired internal services be revised to a subset of those originally intended, the architecture should still consider the de-scoped services. The extent to which the architecture analyses and defines these services may be constrained to Use Cases only, for example, depending on the level of risk they represent to realising the longer-term business outcomes. Without any analysis, the resulting architecture could limit the enterprise’s ability to eventually address the previously de-scoped internal services or, worse still, lead the enterprise into a whole new change program.

Similarly, a Solution Architect required to define an architecture for a new line-of-business application would be engaged within the scope of an approved delivery project. In the event the project scope is revised to exclude important functions that have a broader strategic benefit, the Solution Architect should continue to include these functions within analysis and definition activities. Again, consideration of the functions may be at the Use Case level, or even at the System and Sub-system context level. Either way, a level of analysis commensurate with the strategic importance of the function should be undertaken.

By accepting that an architecture should not be explicitly constrained by program/project scoping dimensions, practitioners can produce architectures of greater enterprise value and reduce the potential for a recurrence of situations like the one I described at the start of this post.

Understand the political landscape

Within any delivery program/project, the architecture practitioner is critical to successful delivery through the removal of uncertainty as it relates to Business, Information, Application and Technical dimensions. Understanding the political landscape inside and outside the program/project in which the practitioner is engaged is important to understanding how to influence governance decision-making.

Practitioners need to work as peers with Program/Project Managers, regardless of the project or business hierarchy. An Architect needs the courage to challenge decisions that may impact the ability of the architecture to deliver business outcomes. This will create tension between the roles, but that tension should be viewed as natural given the distinctly different focuses of the disciplines. An architecture practitioner who understands this working relationship is better empowered to challenge decisions through appropriate channels, rather than operating as a subordinate to a Program/Project Manager.

Similarly, close relationships with business stakeholders are critical to influencing governance decision-makers. This means not just IT stakeholders, but those who will consume the solution itself. In some instances access to stakeholders may be limited by program/project structure; however, you should take every opportunity to access these stakeholders and become known to them.

Finally, with the aforementioned knowledge of enterprise architecture practice, start leveraging the Enterprise Architecture practice in the way it was intended. Establishing a close working relationship with the Enterprise Architect who is responsible for the domain in which you are working is the first step. Engage them directly, regularly and actively regarding architecture scope, risks and issues. An engaged Enterprise Architect can assist in opening doors to stakeholder groups and tackling governance decisions that may impact the enterprise architecture vision.

Architecture practitioners of all levels and experience can support successful business outcomes that go beyond architecture deliverables constrained by the initiative scope. As practitioners, we must do more to deliver architectures aligned with the enterprise vision, and should engage outside the project construct so that we are adequately prepared to challenge decisions that may impede this objective.